The Qacchua Newsletter: June 2025

Interesting things - mostly work related - that I saw last month

I have been traveling on work and vacation this past month, and so haven’t gotten around to reading as much as I usually do. I wasn’t even sure if I was going to do this feature - I am still on vacation - but then I just started this feature in May, so it would not be a good look if I bunked on the second try itself. Plus, I didn’t read as much, but that doesn’t mean that I did not read at all.

So that said, here are the best things I have read this month.

For almost 20 years now, I’ve hired people. I’d like to think that with one look at a resume, I get a decent idea of whether they’d be a good fit for the role. This is even more pronounced if I get a chance to interview them. Hiring the right candidate for a job gives a sense of satisfaction that is unique in the corporate world.

But the downside to this is that (generally) you can only hire one person at a time. For every successful candidate, there are about 10x the number who did not get that job. What do you tell those 10 people? I have myself been at the receiving end of the feedback loop multiple times, where someone told me that I did not get the job I was hoping for. This feedback is important to me, and I am sure it is important to everyone else, to know what they could do differently. But the feedback can also be a little counter-productive. I’ve had interviews where the feedback was that my interview showcased that I was strong in X (fill in the competency here) but the job needed someone with some capabilities in Y. Which is fine, but three months later, for a different job, I was told that I was great at Y, but the job needed Z. This has also been a constant complaint of a lot of people that I’ve coached through their career process.

The feedback made no sense.

Coaching through this can be tough, and it varies from case to case. Acing a job interview is like competitive golf - you can only do the best you can possibly do, but there’s always the chance that someone else mastered that course better than you. The has an excellent article that helps make sense of the incongruence of the whole hiring process. It is behind a paywall, and I am not a paying member, but the portion before the paywall is itself so clear that I am now going to reference that instead of having to come up with reasons why someone did not make the cut.

I recently found to be an excellent source for what one can do to be prepared - individually and also societally. It is an India-based publication, so the articles are generally India focused, and hence they capture my interest even more. It brings some learning in quick and easy paragraphs along with some review material. Excellent newsletter that we should keep an eye out for.

I found one of their recent posts quite illuminating on what India needs to do to stay abreast of the changes in technology. India’s service sector is built on the cost arbitrage model. As AI-agents start taking over, they will become commoditized, and possibly India’s cost arbitrage model will kick in to build new agents. But what happens to the society which has built its foundation on service sector jobs? Newer jobs need to be created and defined that are in touch with how the customer side is changing. I especially loved this paragraph:

Similarly, India needs to manage this transformation, and the hard truth is that the responsibility lies with each one of us. Businesses will move their operations to where they can make more profit and grow stakeholder value. India will need to build ‘smart cities’ with the right digital and social infrastructure that can house digital twin specialists, smart factory engineers, etc.. All of them first need to have a deep and extensive domain knowledge of managing high-value jobs remotely. Workplace professionals of the future need to compete with the world’s best not on price but on value.

As someone whose career grew through the cost arbitrage model, I found myself nodding in agreement through the entire article, so thought I’d pass it on to everyone.

Speaking of jobs these days, and my current favorite topic of AI.

It is my favorite, not because I am good at it - my core competency is less Artificial Intelligence, more Natural Stupidity. But more so, because a lot of what I read about the powers of AI doesn’t really make sense (to me). That said, whether it makes sense to me or not is irrelevant, because it is coming. Time will tell if it is a tsunami or a raging tide, but if I am not prepared for what’s coming, that’s more on me than on anyone else.

Someone sent me this image of Marc Andreessen’s 2025 career advice. I don’t know if this is true or not, but it seems fairly authentic and kind of sounds like Mr. Andreessen’s tone. I mean some AI could have written it, or maybe he spoke about it somewhere in a podcast or something, and then some generic AI chatbot summarized it in succinct bullet points, but I mean why let facts come in the way of a good story? Whatever. Here it is for your reading pleasure!

After pondering for some time whether this was genuine, the first question that came to my mind was: how is this any different from career advice someone would have given me 25 years ago when I was starting my career? Or 50 years ago when my late father was starting his? Substitute AI with “coding” and it sounds very similar to advice I would have got in the year 2000. Everyone and their grandma was going to become a software engineer - thanks to the Y2K boom. My first job out of college was that of a software engineer, something I struggled with for a year or so and then I got out. Mechanical engineering majors did not lead to glamorous careers, and computer science majors were the cat’s whiskers. Now, it turns out that AI can code for itself, and people still need to design and manufacture stuff.

The more things change, the more they remain the same.

Speaking of things that remain the same, one thing that remains the same from the last time I published this roundup is putting in a post by . If you haven’t subscribed to his newsletter, you are missing out on a healthy dose of skepticism in this era of “Executive FOMO”, especially in the AI space.

A lot of this hype is being created by the consulting space. Executives trying to stay relevant without really knowing the depths of the businesses that they run end up creating a knowledge vacuum. Mostly this is because they know WHAT they want, but they really don’t know enough about their tools - they haven’t really used them personally, have they? - to be able to get to that place. So into this vacuum step the consultants of the world - McKinsey in this case being just an example - to “analyze” their need and give a quick solution. Often, in their rush to come up with a solution, they come up with a 2D-solution - they don’t consider “depth”. They apply theories from management school and a Pareto-style approach, identifying solutions for the 90% of scenarios where AI works just fine. The problem is that most large enterprises now have enterprise software which works seamlessly for the 90% scenarios, and have humans in place for the 10% edge cases that are too few to try and automate. “McKinsey” says this AI will replicate the human activity, and so it is implemented (no one is fired for going with McKinsey). But it fails.

It fails for two reasons - a) the training data is too little and b) the process is not the most efficient. It is modified to include the human in some way. The human can “manage” the wasted effort, but the automation cannot. So now the automation creates that wasted effort, only faster. And the payoff from faster execution is washed away by the incorporated waste.

And so we end up with outcomes like 80% adoption, 0% impact on EBITDA.

This is not to say that AI is useless. It has an immense amount of use, and can definitely transform the way we do things. That said, it is my strong belief that AI will benefit those who are clear on two things - that they need adequate depth in their process to know the gaps that AI can fill, and that this is not going to be an “Agile sprint” or two. To do that, you need to understand what you’re talking about. Where the problems are, what the “process” is, what it is designed for, what problems it was originally trying to solve. Or as Marc Andreessen may or may not have said, “Be so good, they can’t ignore you. Focus on getting excellent at your craft”.

In Friedman’s words -

McKinsey’s agent thesis is not wrong so much as it is dangerously incomplete. Yes, copilots are UX glitter. Yes, agents are the next wave. But this isn’t a linear march toward productivity. It’s a strategic trench war, fought across the stack: infrastructure, data access, ops design, and internal resistance.

Anyway, rant over. Onward and upward.

You and I are not really at that level where we can define “what is and what should never be”[1]. Rather it’s ours not to reason why, ours but to do and die. We don’t get to define if and where AI can be used; instead, you (like me) are probably just being told by your bosses that you should use AI in your daily work. And your question is - Where and how do I get started?

This is the era of the internet, and so you will find a gazillion things on the internet that will answer that question for you. But in my use of the LLM-fied space of my work life, I had struggled to find the correct use case for ChatGPT / Gemini / Copilot / etc. Answers seemed vague and kind of forced. Plus there’s always the fear of “AI hallucinations”. So I moped around, checking the boxes but never really figuring out why and how this was such a big deal.

Until I started following . Turns out I needed to not be so deferential to the LLM. I just had to do what always did - treat it like a manager talks to an employee! I can do that! I have done that for years every day.

This mental model definitely has helped me in my interactions with the LLM-world. My prompts are a lot more descriptive and I now set the stage a lot more definitively. The instructions are a lot clearer, and it’s now more of a conversation than an ask. Individual contributors using LLMs need to now “think” like their managers and ask accordingly.

This brings up an interesting aspect that I hope I will be able to write on in a subsequent post. I read a decent amount of material in this space, and I keep hearing about how o3 is better than DeepSeek, but is not as good as Gemini 2.5 Pro[2]. All that is fine, but I really don’t know enough of the technicality to know the difference. In my daily use, as I am sure in most of yours, you could use all these names interchangeably, and my life would not be that different. I cannot tell how one LLM is that much different from another.

At some point, this is going to be the case for every LLM. The amount of data on the planet is ginormous, but not infinite. There’s only so much that they can train on. Every LLM will neutralize the other. What then becomes the competitive advantage for organizations? How will they maintain their edge, if the AI-models are all the same?

Wait! Could that be its employees?

You’ve probably figured out by now that I am an AI-skeptic. Unfortunately, in this era of 2D labels, the word “skeptic” suggests that I doubt the power of AI. I do not. I am sure that AI can solve all the problems that until now were considered the domain of a God[3]. At which point, AI will be “God” and there will be the believers and the non-believers. has an interesting post on the two cults that are forming and will possibly morph into their own religions soon.

I found this section particularly interesting

But has it really grown? As I’ve pointed out before, these kinds of gnostic AI beliefs are millennia old; transhumanists were beefing with AI simulationists 1800 years ago. It’s certainly possible that, net, more people are delusional today than would have been without the existence of ChatGPT. It’s also possible that the number of deluded is essentially unchanged, and all we’re seeing is a growth in the relative popularity of this particular AI-spiced flavor. It’s even possible that the net number of deluded has shrunk. Articles like those above are good for posing such questions, but alas very bad at answering it.

Like I said, the more things change, the more they remain the same.

And finally!

Speaking of my AI-skepticism and my own doubts. I do not doubt the power of AI as a technology. What I doubt is the words and intentions of the people telling me to use AI to solve all my problems. As someone who has an engineering background, I can make the case that these intentions are noble. Engineers are trained to always aim to solve problems. We want to solve every single problem on the planet. We will invent problems if we need to, so that we can sate our desire to solve problems. To me, the problem now is that the money involved is such that people have an incentive to get me to use AI. They benefit financially from my use of their technology. Which makes me look at their statements with a bit of skepticism.

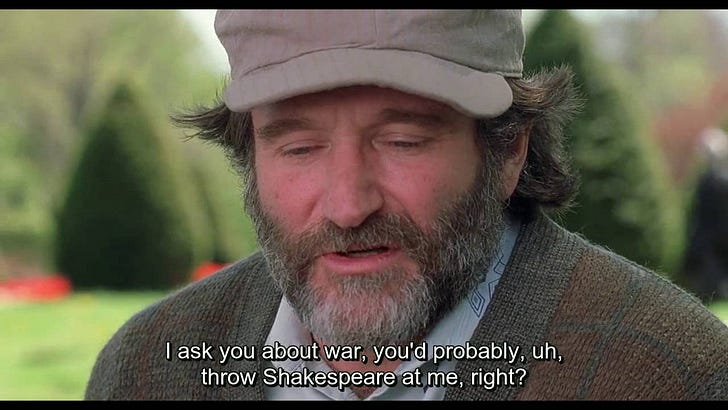

The point here is that AI is great. But it is very 2-dimensional. I was going to write a lot more on that - maybe in a separate post. And then I remembered that this scene from the movie Good Will Hunting covers my feelings well. So instead of boring you with more words, this might be a good way to end.

As always, would love to hear your comments and thoughts on this newsletter feature.

[1] One of my cheap thrills is to pull in a Led Zeppelin song in a professional setting. Achievement unlocked.

[2] Just an example. I don’t really know. Plus in this day and age, I could make up a statement, and find something online that would provide me the necessary evidence.

[3] I’ve often held this belief that our evidence of a God is essentially low-probability events that we cannot fully explain.

Thanks Chirag for this lovely comment on the article and newsletter. Really appreciate it.